Azure OpenAI Fine-Tuning

Introduction

Fine-tuning GPT-3.5 or GPT-4 models on Microsoft Azure with W&B lets you track and analyze model performance in detail. This guide builds on the OpenAI Fine-Tuning guide and adds the steps and features specific to Azure OpenAI.

Note: The Weights & Biases fine-tuning integration works with openai >= 1.0. Install or upgrade to the latest version with `pip install -U openai`.

Prerequisites

- An Azure OpenAI service set up according to the official Azure documentation.

- The latest versions of openai, wandb, and any other required libraries installed.
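Before running the sync snippet, it can help to confirm the prerequisites in one place. The sketch below is a hypothetical preflight check, not part of the W&B or OpenAI libraries: it reads installed package versions with the standard-library `importlib.metadata`, checks that openai is at least 1.0, and checks the two environment variables (`AZURE_OPENAI_ENDPOINT`, `AZURE_OPENAI_KEY`) that this guide's client setup reads. The helper names `parse_major_minor` and `preflight` are illustrative assumptions.

```python
import os
import re
from importlib.metadata import PackageNotFoundError, version

# Environment variables read by the AzureOpenAI client setup in this guide.
REQUIRED_ENV_VARS = ("AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_KEY")


def parse_major_minor(version_string):
    """Return (major, minor) from a version string such as '1.12.0'."""
    match = re.match(r"(\d+)(?:\.(\d+))?", version_string)
    if match is None:
        raise ValueError(f"Unrecognized version string: {version_string!r}")
    return int(match.group(1)), int(match.group(2) or 0)


def preflight(environ=None):
    """Hypothetical helper: return a list of problems; an empty list means all checks passed."""
    environ = os.environ if environ is None else environ
    problems = []

    # The W&B fine-tuning integration requires openai >= 1.0.
    try:
        installed = version("openai")
        if parse_major_minor(installed) < (1, 0):
            problems.append(f"openai {installed} is installed; openai >= 1.0 is required")
    except PackageNotFoundError:
        problems.append("openai is not installed")

    try:
        version("wandb")
    except PackageNotFoundError:
        problems.append("wandb is not installed")

    # Empty strings count as missing.
    for name in REQUIRED_ENV_VARS:
        if not environ.get(name):
            problems.append(f"environment variable {name} is not set")
    return problems
```

Calling `preflight()` with no arguments checks the real process environment; passing a dictionary makes the check easy to exercise without touching `os.environ`.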

Sync Azure OpenAI fine-tuning results in W&B in 2 lines

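```python
import os

from openai import AzureOpenAI

# Connect to Azure OpenAI. The client also needs an API version; here it is
# read from OPENAI_API_VERSION (use a version that supports fine-tuning).
client = AzureOpenAI(
    azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
    api_key=os.getenv("AZURE_OPENAI_KEY"),
    api_version=os.getenv("OPENAI_API_VERSION"),
)

# Create and validate your training and validation datasets in JSONL format,
# upload them via the client, and start a fine-tuning job. Keep the job ID,
# e.g. job_id = client.fine_tuning.jobs.create(...).id
job_id = "your_fine_tune_job_id"  # placeholder: replace with your job's ID

from wandb.integration.openai.fine_tuning import WandbLogger

# Sync your fine-tuning results with W&B!
WandbLogger.sync(
    fine_tune_job_id=job_id, openai_client=client, project="your_project_name"
)
```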
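The comment in the snippet above compresses dataset preparation, upload, and job creation into one line. The sketch below fills in those steps under stated assumptions: it writes chat-format JSONL (one `{"messages": [...]}` object per line), runs a basic sanity check of its own devising (every line parses and ends with an assistant message), uploads both files with `client.files.create(..., purpose="fine-tune")`, and starts a job with `client.fine_tuning.jobs.create`. The system prompt, the base model name `gpt-35-turbo-0613`, and the helper names are hypothetical; check which base models your Azure region supports for fine-tuning.

```python
import json

# Hypothetical values: substitute your own system prompt and a base model
# that your Azure OpenAI resource can fine-tune.
SYSTEM_PROMPT = "You are a helpful assistant."
BASE_MODEL = "gpt-35-turbo-0613"


def write_chat_jsonl(examples, path, system_prompt=SYSTEM_PROMPT):
    """Write (user, assistant) text pairs to `path` as chat-format JSONL."""
    with open(path, "w", encoding="utf-8") as f:
        for user_text, assistant_text in examples:
            record = {
                "messages": [
                    {"role": "system", "content": system_prompt},
                    {"role": "user", "content": user_text},
                    {"role": "assistant", "content": assistant_text},
                ]
            }
            f.write(json.dumps(record) + "\n")
    return path


def validate_chat_jsonl(path):
    """Sanity check (this sketch's own rule): each line is JSON ending in an assistant turn."""
    count = 0
    with open(path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            messages = json.loads(line).get("messages", [])
            if not messages or messages[-1].get("role") != "assistant":
                raise ValueError(f"line {line_no}: expected a final assistant message")
            count += 1
    return count


def start_fine_tune(client, train_path, val_path, model=BASE_MODEL):
    """Upload the training and validation files, start a job, and return its ID."""
    with open(train_path, "rb") as f:
        train_file = client.files.create(file=f, purpose="fine-tune")
    with open(val_path, "rb") as f:
        val_file = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(
        training_file=train_file.id,
        validation_file=val_file.id,
        model=model,
    )
    return job.id
```

With the `client` from the snippet above, `job_id = start_fine_tune(client, "train.jsonl", "val.jsonl")` yields the ID that `WandbLogger.sync` takes as `fine_tune_job_id`.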

Check out interactive examples

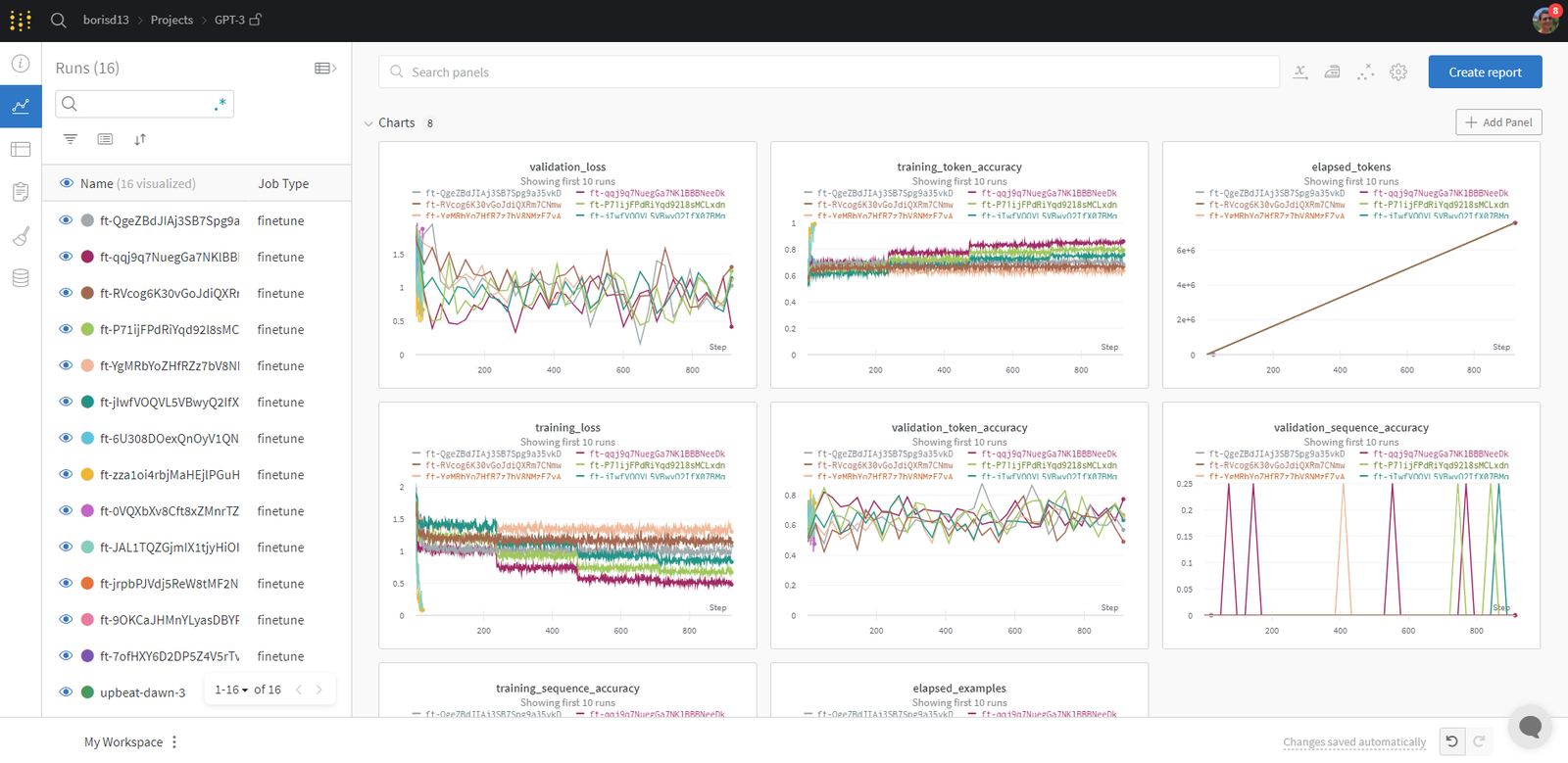

Visualization and versioning in W&B

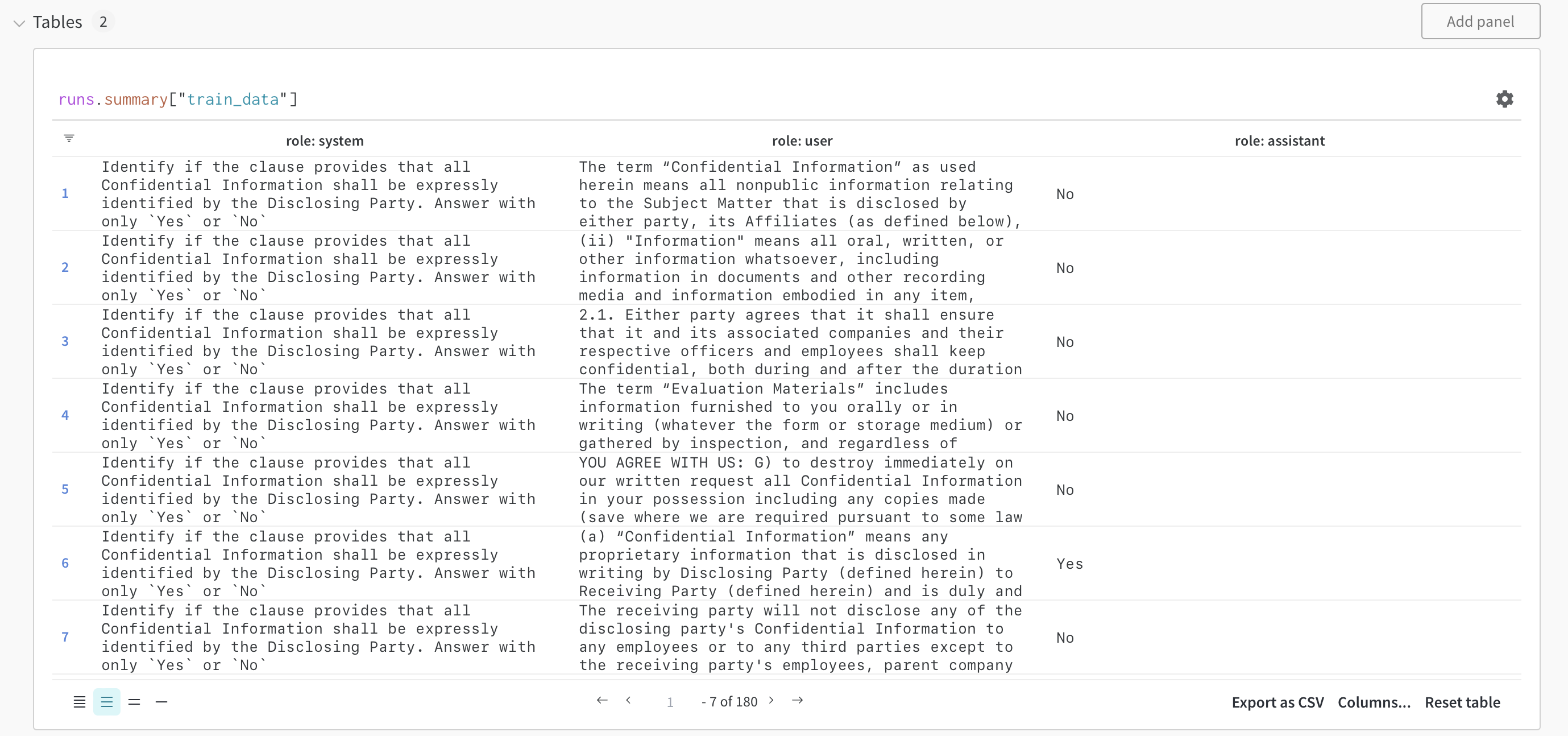

- Use W&B to version and visualize training and validation data as Tables.

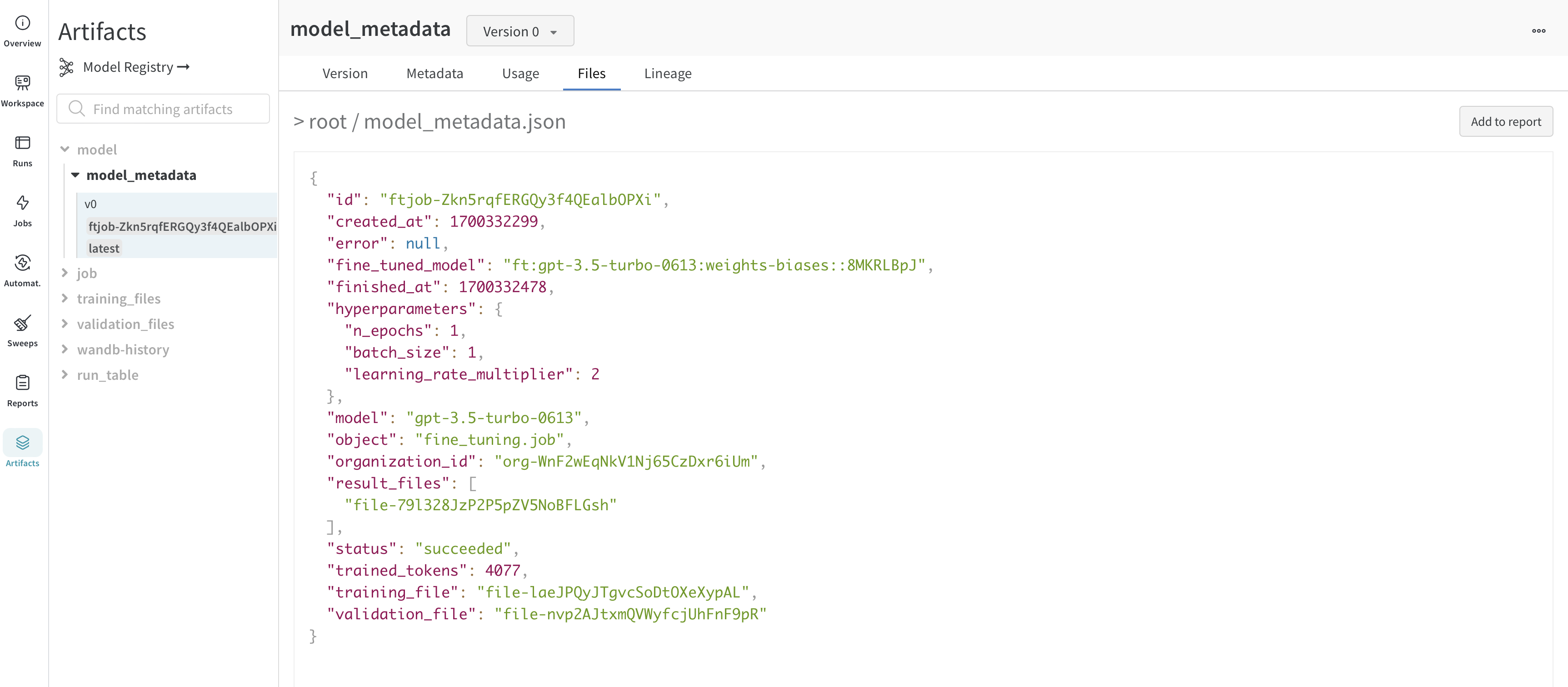

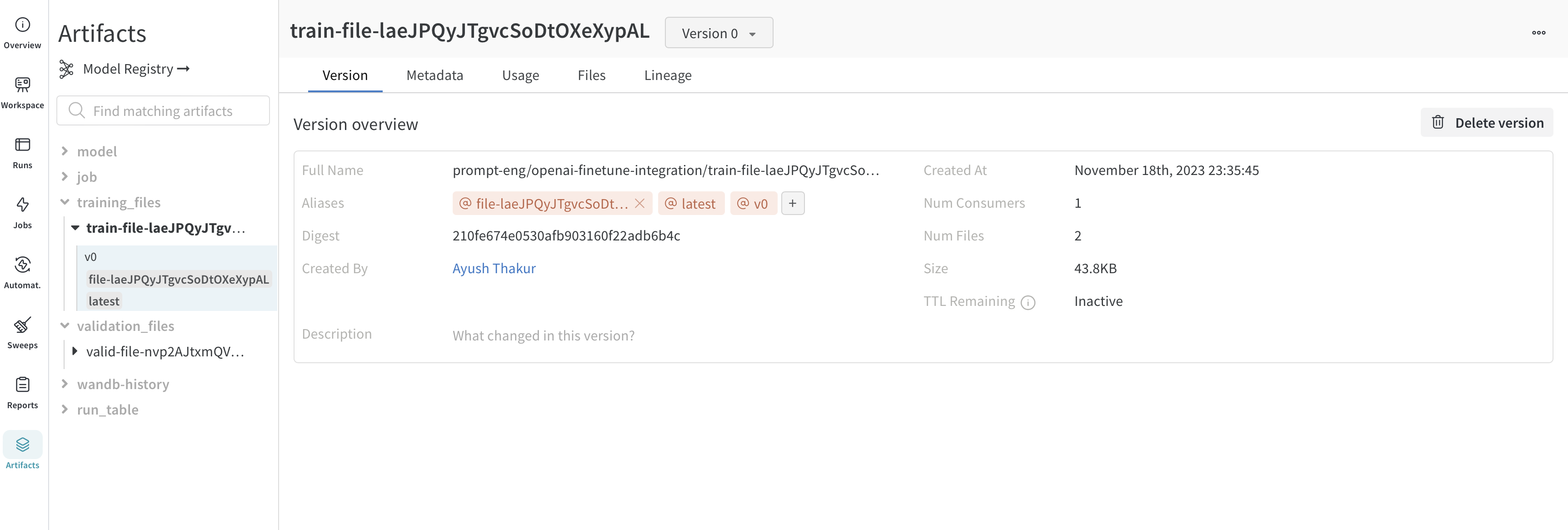

- The datasets and model metadata are versioned as W&B Artifacts, allowing for efficient tracking and version control.

Retrieving the fine-tuned model

- The fine-tuned model ID can be retrieved from Azure OpenAI and is logged as part of the model metadata in W&B.
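To read the fine-tuned model ID directly from Azure OpenAI, one option is to poll the job with `client.fine_tuning.jobs.retrieve(job_id)` and read its `fine_tuned_model` attribute once the job has succeeded. The sketch below assumes `"succeeded"`, `"failed"`, and `"cancelled"` are the final job states; the helper name, polling interval, and timeout handling are illustrative choices, and `sleep` is injectable so the loop can be tested without waiting.

```python
import time

# Assumed final job states; the model ID is only available after "succeeded".
TERMINAL_STATES = {"succeeded", "failed", "cancelled"}


def wait_for_fine_tuned_model(client, job_id, poll_seconds=60.0,
                              timeout_seconds=None, sleep=time.sleep):
    """Hypothetical helper: poll a fine-tuning job and return its fine-tuned model ID."""
    waited = 0.0
    while True:
        job = client.fine_tuning.jobs.retrieve(job_id)
        if job.status in TERMINAL_STATES:
            break
        if timeout_seconds is not None and waited >= timeout_seconds:
            raise TimeoutError(f"job {job_id} still {job.status!r} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds

    if job.status != "succeeded":
        raise RuntimeError(f"job {job_id} ended with status {job.status!r}")
    return job.fine_tuned_model
```

For example, `model_id = wait_for_fine_tuned_model(client, job_id)` blocks until the job finishes and returns the same ID that W&B records in the model metadata.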